About Us

We are a team of researchers from the Human-AI Lab at the University of Michigan, working to develop new assistive technologies for blind and visually impaired people. Our goal is to make both the real world and the digital world more accessible! We have released apps for using everyday physical appliances, such as microwaves, and for understanding image content. We are also developing assistive technologies for real-world descriptions, visual information filters, image editing tools, 360° videos, and mixed reality.

Join us for our presentation at 1:00 to learn more about the mobile applications and research projects we are working on, or get involved with our research by filling out this interest form!

Presentation at VISIONS 2024

We are excited to present at VISIONS 2024, a vendor fair of technology services for blind, visually impaired, and physically disabled people hosted by the Ann Arbor District Library (AADL)!

In addition to our booth at table 20, you can attend our talk at 1:00 in the Downtown Multi-Purpose Room. More details, including a link to livestream the presentation, are available on the event page from the AADL.

Get Involved with Our Research!

If you are interested in getting involved with our studies, please fill out this interest form!

We aim to work directly with blind and visually impaired people to get feedback on the tools we develop. We often recruit people for paid research studies to discuss accessibility challenges and design ideas, or to test new prototype technologies and give feedback. These studies are sometimes conducted in our lab on the University of Michigan campus in Ann Arbor, and sometimes remotely over a video call. If you are personally interested in participating, or know people who might be interested, please fill out the interest form linked above. You can also contact us directly at humanailab@umich.edu.

Our Projects

Our research group develops new assistive technologies in a variety of areas. The projects listed here are a sample of our work! To learn more, visit humanailab.com.

VizLens

VizLens is an app for reading physical appliances, such as microwaves or washing machines, without raised braille stickers. VizLens first uses the phone's camera to identify buttons on the appliance. Then, users can move their finger over the appliance, and the app will read aloud the button that is underneath their finger.

ImageExplorer

ImageExplorer aims to help people understand image content with AI. It provides a text summary of image content as well as a touch interface, where users can move their finger over the touchscreen to explore the spatial layout of the image and get more details about specific pieces of information. Users can customize settings to focus on the information that they are interested in.

BrushLens

BrushLens is a new phone case prototype that provides a workaround for people with visual or motor impairments to use otherwise inaccessible touchscreen kiosks. The BrushLens phone case is swiped across a touchscreen, and it automatically detects and presses the intended buttons.

OmniScribe

OmniScribe is a project aimed at making 360° videos more accessible with audio descriptions. Audio descriptions for these immersive videos can include spatial aspects that change as the person watching moves their head.

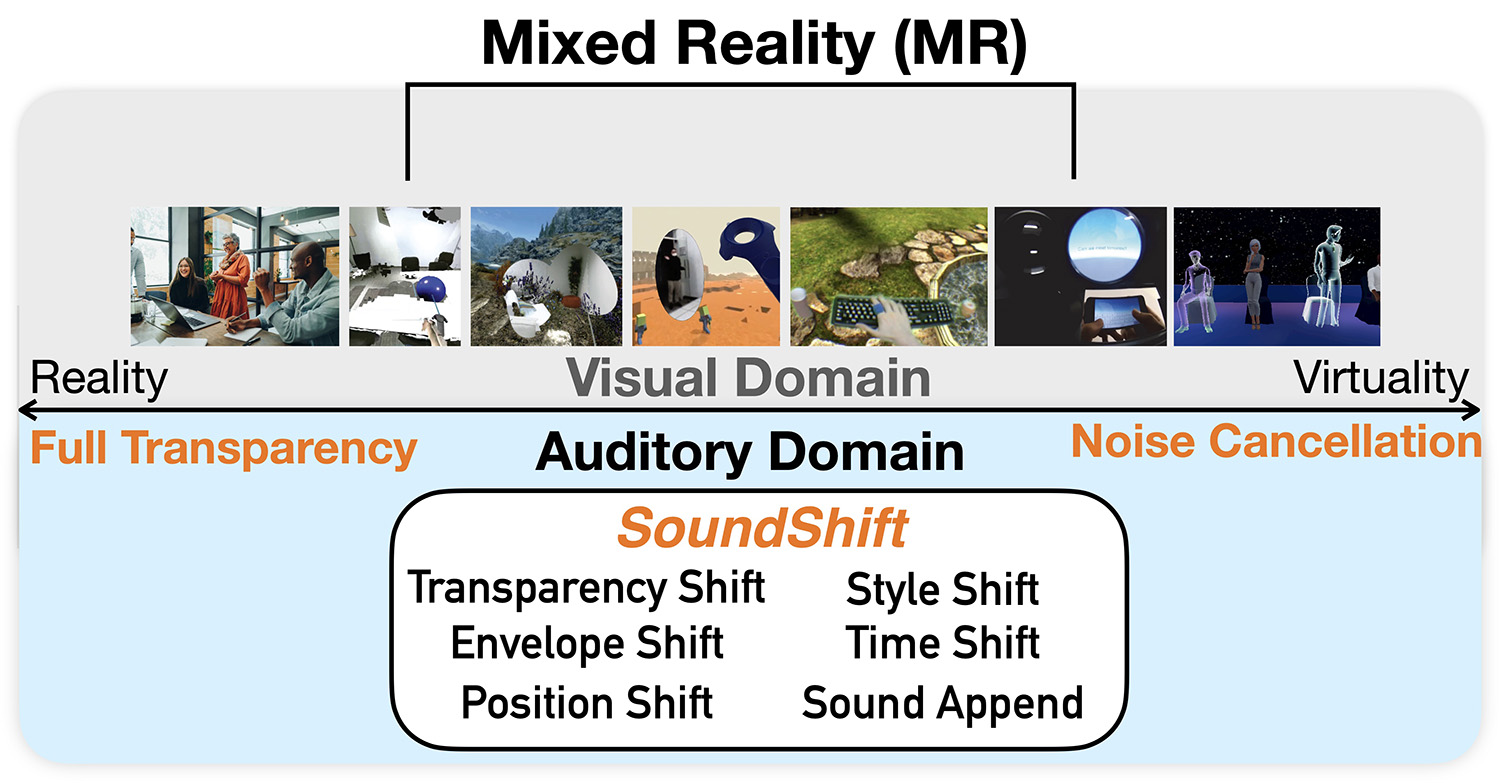

SoundShift

SoundShift is a research concept aimed at enhancing users' awareness of sounds in both digital and real-world environments. We developed a set of methods for customizing audio output to new situations.

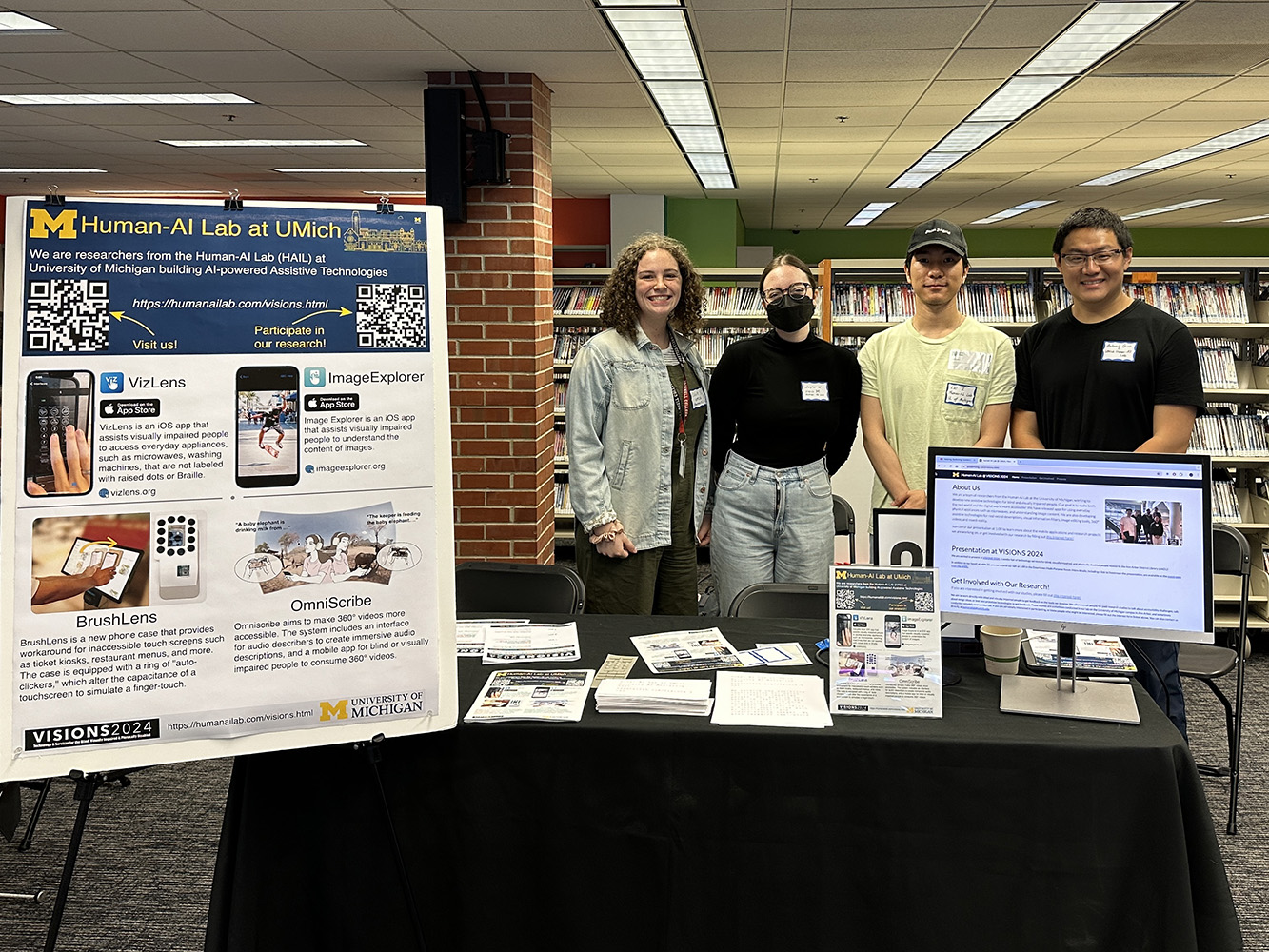

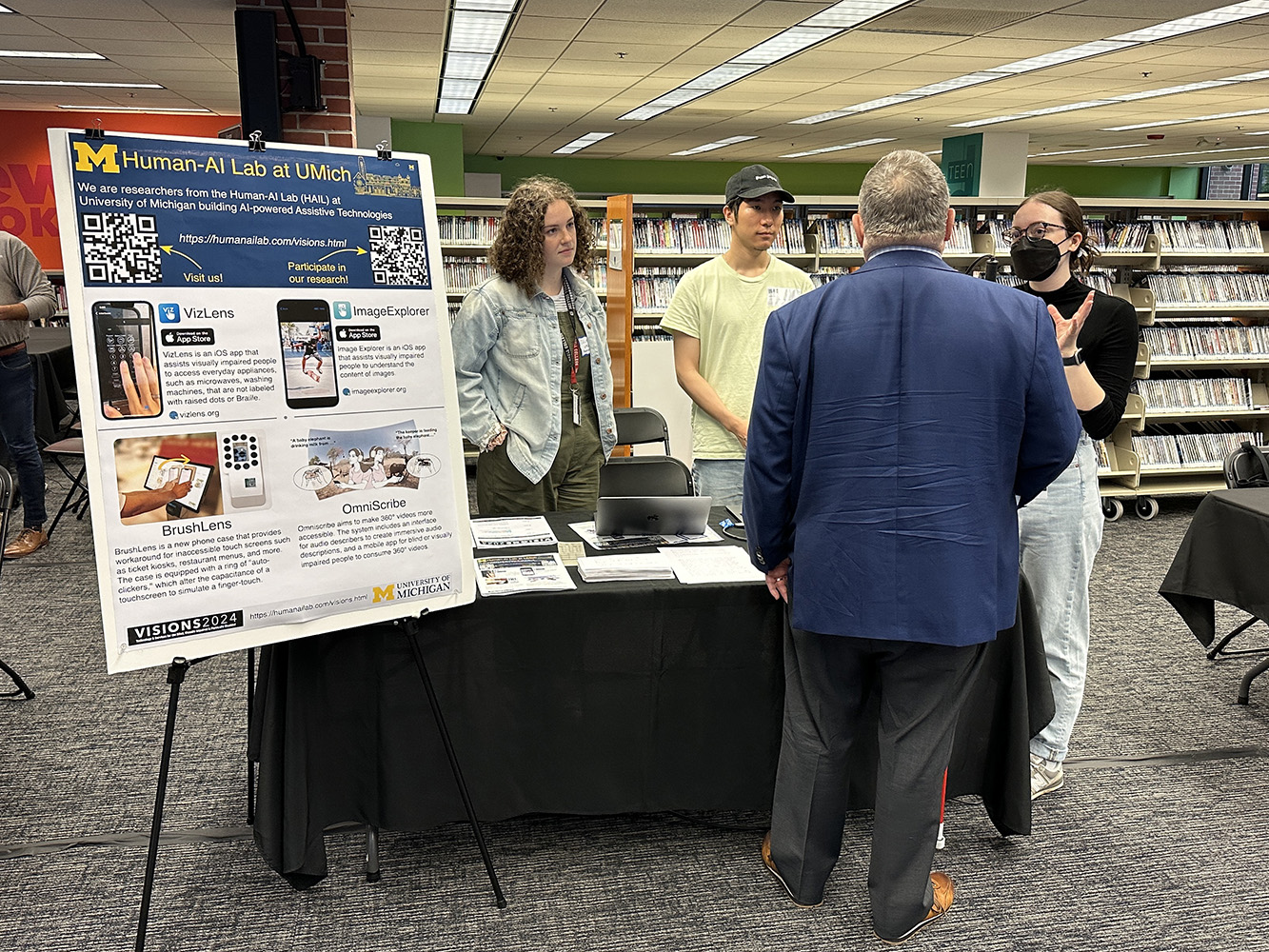

At the Event!

Here are photos from the event.